Speech Recognition in Scratch 3 - turning Hello into Bonjour!

The Raspberry Pi Foundation recently released a programming activity Alien Language , with support Dale from Machine Learning for Kids , tha...PS3 Controller to move a USB Robot Arm

Guest Blogger Hiren Mistry, Nuffield Research Placement Student working at the University of Northampton. How to use a PS3 Controller to...Scratch Robot Arm

It is not physical but CBiS Education have release a free robot arm simulator for Scratch. Downloadable from their site http://w...Tinkercad and Microbit: To make a neuron

The free online CAD (and so much more) package Tinkercad https://www.tinkercad.com/ under circuits; now has microbits as part of the list ...Escape the Maze with a VR robot - Vex VR

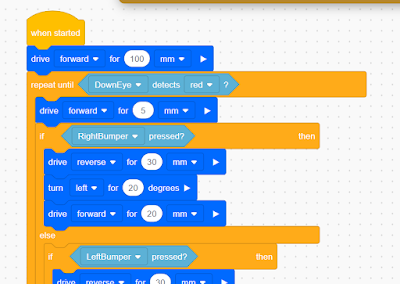

You don't need to buy a robot to get programming a robot, now there are a range of free and relatively simple to start with robot simula...Easy, Free and no markers Augmented Reality - location based AR

For a few years, I have been a fan of Aframe and AR.js - these are fantastic tools for creating web-based Virtual and Augmented Reality. No...Coral Dev Board and Raspberry Pi

This is the second of a planned occasional series of posts on playing with some of the current AI specific add-on processors for Intenet of ...Explaining the Tinkercad microbit Neural network

In a previous post, I looked at developing a neural network in Tinkercad around the Microbit (details available here ) and the whole model ...VR robot in a maze - from Blocks to Python

Recently I produced a post about playing with Vex Robotics VexCode VR blocks and the Maze Playground. The post finished with me saying I w...4tronix Eggbit - cute and wearable - hug avoider

/ The ever-brilliant 4tronix have produced Eggbit https://shop.4tronix.co.uk/collections/microbit-accessories/products/eggbit; a cute, wear...

Robots and getting computers to work with the physical world are fun; this blog looks at my own experimenting and building in this area.

Friday 31 December 2021

Top 10 viewed posts 2021 on the Robot and Physical Computing Blog

Sunday 26 December 2021

Hug Avoider 4 - micropython, Eggbot and speech

- pin8 - Green button

- pin12 - Red button

- pin14 - Yellow button

- pin16 - Blue button
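The pin list above can be captured as a small lookup table. What follows is a minimal sketch, not the code from the post: `BUTTON_PINS`, `pressed_buttons` and the `read_pin` callable are illustrative names, the fourth button is assumed to be on pin16, and a reading of 1 is assumed to mean "pressed" (worth checking against the Eggbit documentation). On the micro:bit, `read_pin` could wrap calls such as `pin8.read_digital()`.

```python
# Button colours keyed by micro:bit pin number, taken from the list above.
# Assumption: the fourth button is on pin16.
BUTTON_PINS = {8: "Green", 12: "Red", 14: "Yellow", 16: "Blue"}


def pressed_buttons(read_pin):
    """Return the colours of the buttons currently pressed.

    read_pin is any function that takes a pin number and returns 0 or 1.
    Assumption: 1 means pressed; invert the test if the board reads 0 when pressed.
    """
    return [colour for pin, colour in sorted(BUTTON_PINS.items()) if read_pin(pin) == 1]


# Off-device example: pretend the Red and Blue buttons are held down.
fake_levels = {8: 0, 12: 1, 14: 0, 16: 1}
print(pressed_buttons(fake_levels.get))
```

Keeping the pin numbers in one table means the rest of a program can talk about colours rather than pins.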

Thursday 23 December 2021

Hug Avoider 3 - experiments with Python and 4Tronix Eggbit

4Tronix's Eggbit (in fact I recently bought three of them: https://shop.4tronix.co.uk/collections/bbc-micro-bit/products/eggbit-three-pack-special :-) ) is a cute add-on for the microbit (see above). In two previous posts I looked at the Eggbit using microcode to produce a hug avoider, which warns when people are too close.

- Get the ultrasound to find the distance;

- Produce smile and surprise on the eggbit's 'mouth';

- Produce rainbow on the neopixels or all the pixels turning red;

- Bring it all together so that if the person is too close (less than 30cm), it reacts.
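The decision at the heart of the steps above can be sketched in plain Python, separate from the hardware. This is an illustration only, not the code from the post: `echo_to_cm` and `choose_reaction` are hypothetical names, the speed of sound is taken as roughly 343 m/s, and pairing "surprise" with red pixels for the too-close case (and "smile" with the rainbow otherwise) is my assumption about how the steps fit together.

```python
SPEED_OF_SOUND_CM_PER_US = 0.0343  # roughly 343 m/s at room temperature
TOO_CLOSE_CM = 30                  # threshold from the list above


def echo_to_cm(echo_us):
    """Convert an ultrasonic echo time (microseconds, out and back) to centimetres."""
    return echo_us * SPEED_OF_SOUND_CM_PER_US / 2


def choose_reaction(distance_cm):
    """Pick the mouth shape and pixel pattern for a measured distance.

    Assumption: surprise + red means too close, smile + rainbow means all clear.
    """
    if distance_cm < TOO_CLOSE_CM:
        return ("surprise", "red")
    return ("smile", "rainbow")


# Off-device example with two made-up echo times.
for echo_us in (600, 3000):
    distance = echo_to_cm(echo_us)
    print(distance, choose_reaction(distance))
```

On the device, the echo time would come from the ultrasonic sensor and the returned pair would drive the mouth LEDs and neopixels.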

Tuesday 29 June 2021

Microbit and Environment Measurement - Using Python

Sunday 14 March 2021

Initial experiments with Code Bug Connect

Code Bug has been around for a while, and it is incredibly cute. When it first came out it was a very interesting piece of kit; it still is, and it is fun to play with. Its spec means it is still a very useful piece of kit:

- 5x5 Red LED display

- 2 buttons

- 6 touch sensitive I/O pads (4 input/output, power and ground)

- Micro USB socket

- CR2032 battery holder

- Expansion port for I2C, SPI and UART

- Blockly-based online programming interface

- CodeBug emulator for checking code before downloading

In 2020 Code Bug launched and successfully funded a Kickstarter campaign (https://www.kickstarter.com/projects/codebug/codebug-connect-cute-colourful-and-programmable-iot-wearable ) for a new version of the Code Bug, CodeBug Connect, with a serious upgrade (and the name Connect is highly appropriate, with USB tethering and WiFi capability in this version). The technical specification (taken from their site https://www.kickstarter.com/projects/codebug/codebug-connect-cute-colourful-and-programmable-iot-wearable ) shows how much of an upgrade this is:

- 5x5 RGB LEDs with dedicated hardware driver/buffer

- Two 5 way navigation joysticks

- Onboard Accelerometer

- 4 GPIO legs, including high impedance sensing for detecting touch (think MaKey MaKey TM)

- 6 Sewable/croc-clip-able loops. 4 I/O including analogue 1 power and ground

- 6 pin GPIO 0.1" header (configurable for UART/I2C/SPI, I2S or analogue audio out)

- QuadCore -- four heterogeneous processors

- 4MB Flash Storage

- 2.4GHz WiFi 802.11 b/n/g, Station and Soft AP (simultaneous)

- Experimental long range wireless 0.8km to another CodeBug Connect

- UART terminal access over USB

- High efficiency SMPS Boost convertor for battery (JST PH connector)

- High efficiency SMPS Buck convertor from 5V USB

Recently the early versions of the Connect have been arriving, and it is cool (IMHO).

The getting going guide https://cbc.docs.codebug.org.uk/gettingstarted/quickstart.html lives up to its name and explains how to get started better than I can here.

First I played with the USB connection and the Blockly-style programming tool https://www.codebug.org.uk/newide/ (see above), essentially producing a very slightly modified version of their starter code. You can perhaps see the Python style coming in with the while True. It works well, and it showed one of the differences between this version and the older one: the LEDs are now colourful instead of red only. Programming it while using the laptop's USB to power it does mean pulling the cable out and plugging it back in to get the code to run, but that is fine and is clearly explained in the guide.

    import cbc
    from color import Color
    import time

    while True:
        cbc.display.scroll_text(str(" Bug 1"), fg=Color('#f0ff20'))
        time.sleep(1)

They have even thought about security. I set my system up to connect via WiFi through my phone, but when I wanted to connect through my laptop as well I had to go through the adoption process so that I could use it from both the phone and the laptop. That sounds scary, but it is well explained in the getting going guide and is relatively simple to do.

I am looking forward to exploring the device a lot more; the guide also includes a number of code examples to play with and explore. A feature I particularly liked was seeing the block code rendered as Python when using the editor on the phone.

Monday 22 February 2021

VR robot in a maze - from Blocks to Python

Sunday 21 February 2021

Escape the Maze with a VR robot - Vex VR

Saturday 1 September 2018

Build a Disco cube:bit that reacts to music.

Essentially the idea is that the vibrations from the music shake the micro:bit enough to give measurable changes in three axes, and these values are used to change the pixels' colour, five pixels at a time.

The code shown below is all that was needed:

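    from microbit import *
    import neopixel, random

    # The cube:bit is driven as one strip of 125 neopixels on pin0
    np = neopixel.NeoPixel(pin0, 125)

    while True:
        # Step through the strip in groups of five, centred on pxl
        for pxl in range(2, 125, 5):
            # Scale each accelerometer axis down to a small colour value
            rd = int(abs(accelerometer.get_x()) / 20)
            gr = int(abs(accelerometer.get_y()) / 20)
            bl = int(abs(accelerometer.get_z()) / 20)  # read but not used below
            np[pxl] = (rd, gr, 0)
            np[pxl-1] = (rd, gr, 0)
            np[pxl+1] = (0, gr, rd)
            np[pxl-2] = (rd, 0, 0)
            np[pxl+2] = (0, gr, 0)
            # Update the cube after each group of five pixels
            np.show()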
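To see what values the loop above produces without a cube:bit to hand, the arithmetic can be pulled out into plain Python. This is a sketch for experimenting off-device; `scale_axis` and `group_colours` are names I have made up for illustration, and the sample readings are invented.

```python
def scale_axis(reading, divisor=20):
    """Scale a signed accelerometer reading to a small non-negative colour value."""
    return int(abs(reading) / divisor)


def group_colours(x, y):
    """Colours for one group of five pixels, keyed by offset from the centre pixel."""
    rd = scale_axis(x)
    gr = scale_axis(y)
    return {
        -2: (rd, 0, 0),
        -1: (rd, gr, 0),
        0: (rd, gr, 0),
        1: (0, gr, rd),
        2: (0, gr, 0),
    }


# The centre pixels visited by range(2, 125, 5) in the code above.
centres = list(range(2, 125, 5))
print(len(centres), centres[0], centres[-1])

# Invented readings: x = -400, y = 1000.
print(group_colours(-400, 1000))
```

Dividing by 20 keeps the colour values low, so even a large reading stays well inside the 0 to 255 range a neopixel accepts.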

Here it is in action:

The music used in the video is

Please feel free to improve on this.

All opinions in this blog are the Author's and should not in any way be seen as reflecting the views of any organisation the Author has any association with. Twitter @scottturneruon

Monday 20 August 2018

Getting Crabby with EduBlock for Microbit

The BinaryBots Totem Crab is available at https://www.binarybots.co.uk/crab.aspx

Here I am going to use Edublocks (https://microbit.edublocks.org/) by @all_about_code to control the claw of the Crab, so that it closes when button A is pressed (and displays a C on the LEDs) and opens when button B is pressed. For a discussion of the Crab, what the pins are, etc., go to http://robotsandphysicalcomputing.blogspot.com/2018/08/crabby-but-fun.html.

The timing of the opening and closing is controlled by how long the C or O takes to scroll across the LEDs. As an aside that I found interesting (it appeals to my geekiness): if you save the blocks using the Save button, they are stored as an XML file. An example extract is shown below:

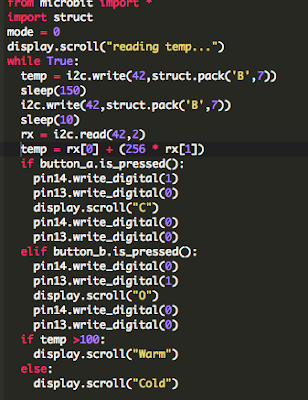

Now I want to explore the Python editor in Edublocks a little, to see if it can be used to expand the range of activities. The code as it stands now:

Using some code developed by CBiS Education/BinaryBots, I have added code to read the Crab's temperature sensor and display "Warm" or "Cold" depending on the reading. The code uses the struct module (see https://pymotw.com/2/struct/) to convert between strings of bytes and native Python data types, which is needed to work with the I2C bus that the Crab's sensors use (more details on the bus can be found at https://microbit-micropython.readthedocs.io/en/latest/i2c.html ). The code below was then downloaded as a hex file to the microbit as before.
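As a rough illustration of the struct step described above (and not the BinaryBots code, which is not reproduced here), the sketch below unpacks two raw bytes into a number and picks a message. The byte layout (big-endian signed 16-bit value in hundredths of a degree), the threshold and the function names are all assumptions made for the example; the Crab's real sensor format may well differ.

```python
import struct

WARM_THRESHOLD_C = 20.0  # hypothetical cut-off between "Cold" and "Warm"


def decode_temperature(raw):
    """Turn two raw bytes into degrees Celsius.

    Assumed format: big-endian signed 16-bit integer, in hundredths of a degree.
    """
    (hundredths,) = struct.unpack(">h", raw)
    return hundredths / 100


def describe(temp_c):
    """Choose the message to scroll on the LEDs."""
    return "Warm" if temp_c >= WARM_THRESHOLD_C else "Cold"


# Made-up bytes standing in for what an I2C read might return.
for raw in (b"\x09\x29", b"\xff\x9c"):
    temp = decode_temperature(raw)
    print(temp, describe(temp))
```

On the micro:bit the bytes would come from an I2C read rather than a literal, but the unpacking step is the same idea.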

The Crab reads in the temperature and displays either "Warm" or "Cold" (currently it repeatedly displays "Warm"). The opening and closing of the claws still works.

So this was a double win: I had a chance to explore whether the Edublocks Python editor works as advertised (it does), and an opportunity to play with the Crab a bit more.

Acknowledgement: Thank you to Chris Burgess and the team at Binary Bots/CBiS Education for sending me a copy of the Python code for accessing the sensors on the Crab.


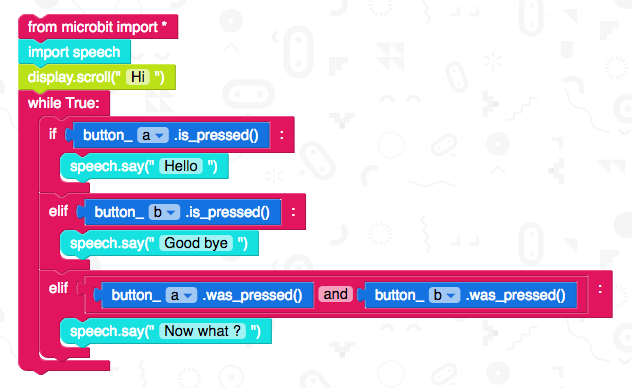

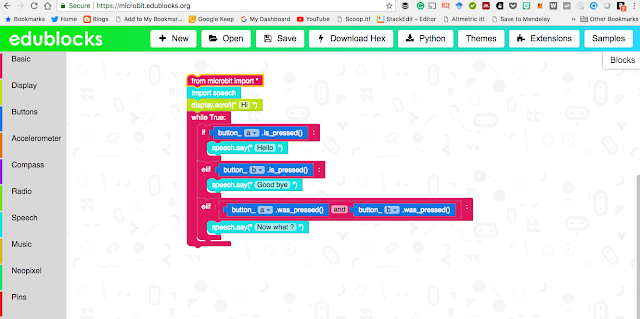

Speech with EduBlocks on BBC microbit

Edublocks for the microbit (and Edublocks in general) allows graphical blocks of code to be dragged and dropped into place, in a similar way to languages such as Scratch. That in itself would be great, but the really useful thing is that while doing so you are actually producing a Python program (technically, in the microbit case, micropython). As others have said before (e.g. https://www.electromaker.io/blog/article/coding-the-bbc-microbit-with-edublocks ), this is a good way of bridging the gap between block-based programming and a text-based programming language (i.e. Python). Add to this the support for Python on the microbit and for things like speech, access to the pins and neopixels, and you have a really useful and fun tool.

Talk is cheap (sort of!)

The project shown here gets the microbit to 'talk' using speech. I have attached a microbit to Pimoroni's noise bit for convenience (https://shop.pimoroni.com/products/noise-bit) but, equally, alligator wires and headphones could be used (https://www.microbit.co.uk/blocks/lessons/hack-your-headphones/activity ). The routine below makes the microbit (through a speaker) say Hello when button A is pressed, Good bye when button B is pressed, and Now what ? when both are pressed. Simple but fun.

They are essentially the same.
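For readers who want something to type in, here is a minimal sketch of the same behaviour written directly in micropython. It is my reconstruction from the description above, not the Edublocks output from the post: `phrase_for` is a made-up helper, the 100 ms polling pause is arbitrary, and the hardware part only runs when the `microbit` and `speech` modules can be imported (that is, on the micro:bit itself).

```python
def phrase_for(a_pressed, b_pressed):
    """Choose what to say for the current button state (None means stay silent)."""
    if a_pressed and b_pressed:
        return "Now what?"
    if a_pressed:
        return "Hello"
    if b_pressed:
        return "Good bye"
    return None


try:
    # Only available when running as MicroPython on the micro:bit itself.
    from microbit import button_a, button_b, sleep
    import speech
    ON_MICROBIT = True
except ImportError:
    ON_MICROBIT = False

if ON_MICROBIT:
    while True:
        phrase = phrase_for(button_a.is_pressed(), button_b.is_pressed())
        if phrase is not None:
            speech.say(phrase)
        sleep(100)  # short pause between polls; value chosen arbitrarily
else:
    # Off-device check of the decision logic only.
    print(phrase_for(True, False))
```

Checking for both buttons first matters: otherwise pressing A and B together would only ever say Hello.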

Here is a video of it in action:

Thoughts.

As you might have gathered, I think Edublocks for the microbit is a fantastic tool. I am planning new experiments with it now, coming soon to this blog. Edublocks for the microbit is not all Edublocks can do; the project itself, which can be found at https://edublocks.org/, is well worth a look. For playing with the microbit in Python for the first time, I would recommend Edublocks for the microbit: https://microbit.edublocks.org/
